Project Overview

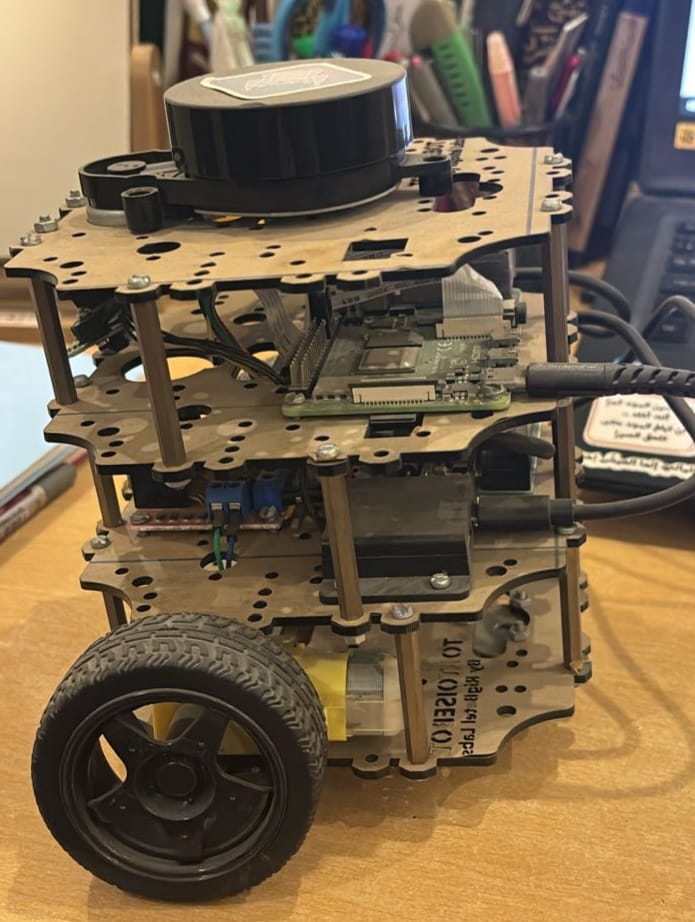

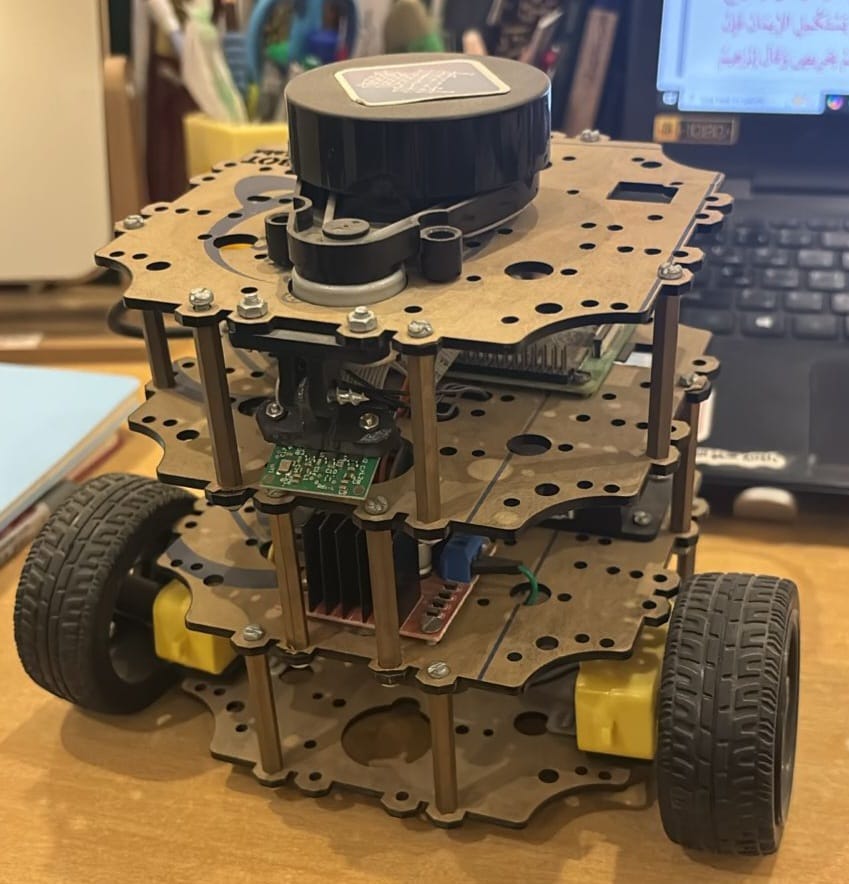

This checkpoint project involved the complete assembly and configuration of a physical TurtleBot3 robot platform from hardware components. The project bridged the gap between simulation and real-world robotics by working with actual hardware, sensors, and embedded systems.

Integrated both ROS1 and ROS2 navigation stacks on the onboard Raspberry Pi and implemented SLAM so the robot could autonomously map unknown environments and navigate without predefined waypoints.

My Role

I completed the entire hardware-to-software pipeline for this project, including:

- Physically assembling the TurtleBot3 hardware from individual components

- Configuring Raspberry Pi as the robot's onboard computer

- Installing and configuring both ROS1 (Noetic) and ROS2 (Foxy/Humble)

- Implementing SLAM for autonomous environment mapping

- Testing and validating autonomous navigation capabilities

Key Features & Implementation

Hardware Assembly & Integration

Assembled the complete TurtleBot3 platform from individual mechanical, electrical, and sensor components, then integrated the motors, motor controllers, LiDAR sensor, IMU, and Raspberry Pi into a functional mobile robot with proper wiring and power management.
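Motor control on a differential-drive base like the TurtleBot3 comes down to converting a commanded body velocity into left and right wheel speeds. The Python sketch below shows that inverse kinematics; the wheel radius and separation are assumed Burger-style values rather than measurements from this build, and `wheel_speeds` is a hypothetical name.

```python
"""Differential-drive inverse kinematics: body velocity -> wheel speeds.

Assumed geometry (TurtleBot3 Burger-style values, not measured on this robot).
"""

WHEEL_RADIUS_M = 0.033      # assumed wheel radius in metres
WHEEL_SEPARATION_M = 0.160  # assumed distance between the two wheels in metres


def wheel_speeds(linear_mps: float, angular_radps: float,
                 radius: float = WHEEL_RADIUS_M,
                 separation: float = WHEEL_SEPARATION_M) -> tuple[float, float]:
    """Return (left, right) wheel angular speeds in rad/s.

    linear_mps: forward speed of the base in m/s (like cmd_vel.linear.x).
    angular_radps: yaw rate in rad/s, positive = counter-clockwise (like cmd_vel.angular.z).
    """
    # Turning shifts ground speed from the inner wheel to the outer wheel.
    v_left = linear_mps - angular_radps * separation / 2.0   # m/s at the left wheel
    v_right = linear_mps + angular_radps * separation / 2.0  # m/s at the right wheel
    # Divide by the wheel radius to get wheel rotation rates.
    return v_left / radius, v_right / radius


if __name__ == "__main__":
    # Drive forward at 0.1 m/s while turning left at 0.5 rad/s.
    left, right = wheel_speeds(0.1, 0.5)
    print(f"left={left:.3f} rad/s, right={right:.3f} rad/s")
```

Pure rotation (zero linear speed) spins the wheels in opposite directions at equal rates, and straight driving gives equal rates, which makes the function easy to sanity-check on the bench.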

Raspberry Pi Configuration

Set up the Raspberry Pi as the robot's embedded computer by installing Ubuntu and configuring it for robotics applications. Established network connectivity, installed the ROS packages, and configured the hardware interfaces used for sensor data acquisition and motor control.

ROS1 & ROS2 Dual Integration

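Integrated both ROS1 (Noetic) and ROS2 (Foxy/Humble) navigation stacks on the same system. Working with both the legacy and the modern ROS ecosystems gave hands-on experience with their architectural differences and with strategies for migrating between versions. For example, ROS1 depends on a central master (`roscore`) for node discovery, whereas ROS2 nodes discover each other peer-to-peer through DDS.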

SLAM-Based Autonomous Mapping

Implemented SLAM algorithms (Gmapping and Cartographer) to enable autonomous environment exploration and map generation. The robot navigated unknown spaces, processed LiDAR data in real time, and built accurate 2D occupancy grid maps without predefined paths or manual control.
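The 2D occupancy grid is built by tracing each LiDAR beam through the map: cells the beam passes through become more likely free, and the cell where it ends becomes more likely occupied. The Python sketch below shows that log-odds update for a single scan. It assumes the sensor pose is already known, which is exactly the part a SLAM algorithm such as Gmapping or Cartographer estimates, so this is only the mapping half of the problem. The update constants and function names are hypothetical, and the scan arguments mirror the `sensor_msgs/LaserScan` fields of the same names.

```python
"""Log-odds occupancy-grid update from one 2D LiDAR scan at a known pose.

Sketch only: the pose is given, whereas a SLAM algorithm estimates it.
Constants are illustrative assumptions, not Gmapping or Cartographer settings.
"""
import math

L_OCC = 0.85              # log-odds added to the cell where a beam ended (assumed)
L_FREE = -0.4             # log-odds added to cells a beam passed through (assumed)
L_MIN, L_MAX = -5.0, 5.0  # clamp so cells can still change state later


def bresenham(x0, y0, x1, y1):
    """Yield the integer grid cells on the segment (x0, y0) -> (x1, y1), inclusive."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        yield x0, y0
        if x0 == x1 and y0 == y1:
            return
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_scan(grid, pose, angle_min, angle_increment, ranges,
                   range_min, range_max, resolution):
    """Update `grid` (dict mapping (ix, iy) -> log-odds) in place.

    pose: (x, y, theta) of the LiDAR in the map frame, in metres and radians.
    resolution: metres per grid cell.
    """
    x, y, theta = pose
    start = (math.floor(x / resolution), math.floor(y / resolution))
    for i, r in enumerate(ranges):
        # Skip beams with no usable return; NaN fails both comparisons.
        if not (range_min <= r < range_max):
            continue
        a = theta + angle_min + i * angle_increment
        end = (math.floor((x + r * math.cos(a)) / resolution),
               math.floor((y + r * math.sin(a)) / resolution))
        cells = list(bresenham(*start, *end))
        for cell in cells[:-1]:  # every cell before the hit was observed as free
            grid[cell] = max(L_MIN, grid.get(cell, 0.0) + L_FREE)
        grid[end] = min(L_MAX, grid.get(end, 0.0) + L_OCC)


def occupancy_probability(log_odds):
    """Convert a cell's log-odds back to P(occupied)."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

Feeding successive scans with their estimated poses into `integrate_scan` accumulates the map; thresholding `occupancy_probability` per cell then yields free, occupied, and unknown cells, similar in spirit to the 0 to 100 (and -1 for unknown) values in a ROS `nav_msgs/OccupancyGrid`.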

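Technologies Used

- TurtleBot3

- Raspberry Pi, Ubuntu

- ROS1 (Noetic), ROS2 (Foxy/Humble)

- Gmapping, Cartographer (SLAM)

- LiDAR, IMU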


Impact & Learning Outcomes

This project provided hands-on experience moving from simulation to real-world robotics. Working with physical hardware exposed challenges that simulation omits or idealizes, including sensor noise, hardware limitations, and real-time performance constraints. The main skills gained were:

- Practical hardware assembly and integration skills

- Embedded systems configuration and management

- Understanding differences between ROS1 and ROS2 architectures

- Real-world SLAM implementation and debugging

- Dealing with real sensor data, noise, and hardware constraints
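One common first step against real LiDAR noise is to discard out-of-range readings and median-filter the rest. The Python sketch below is a generic example of that technique, not the filtering used in this project; `clean_ranges` and its default window size are hypothetical, and the inputs mirror the `ranges`, `range_min`, and `range_max` fields of a ROS `sensor_msgs/LaserScan` message.

```python
"""Clean a raw LiDAR range array before using it for mapping or obstacle checks."""
import math
from statistics import median


def clean_ranges(ranges, range_min, range_max, window=5):
    """Return a median-filtered copy of `ranges`.

    Readings outside [range_min, range_max], including NaN and inf, are treated as
    invalid and returned as math.inf ("no return"); this is a simplification.
    Each valid reading becomes the median of the valid readings in a centred window.
    The window does not wrap around the ends of the scan.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    # NaN fails both comparisons, so it is marked invalid as well.
    valid = [r if range_min <= r <= range_max else None for r in ranges]
    half = window // 2
    out = []
    for i, r in enumerate(valid):
        if r is None:
            out.append(math.inf)
            continue
        neighbours = [v for v in valid[max(0, i - half): i + half + 1] if v is not None]
        out.append(median(neighbours))
    return out
```

A median is used rather than a mean because a single spurious spike barely moves the median of its neighbours, while it would pull a mean toward the bad value and blur real obstacle edges.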